BASIC CONCEPTS

Brief explanations of frequently used digital video terms, intended for users who are not deeply familiar with digital video technologies.

I. Signal Types

II. Video Standards

III. Video Compression

IV. File Wrappers

V. Streaming Protocols

VI. Various other Concepts

I. Signal Types

Analog video signals

An analog signal represents a time-varying quantity as a continuous signal: information is conveyed by the continuous variation of some property of the signal over time. The most common form of analog signal transmission is electrical, where the information is carried by variations in voltage (or in the amplitude, frequency or phase of a carrier wave).

Analog video is a video signal transferred by an analog signal. When combined into one channel, it is called composite video. Analog video may be carried in separate channels, as in two channel S-Video and multi-channel component video formats.

Composite Video: Composite (1 channel) is an analog video transmission - without audio - that carries standard definition video typically at 480i or 576i resolution. Composite video is usually in standard formats such as NTSC, PAL and SECAM and is often designated by the CVBS initialism, meaning "Color, Video, Blanking, and Sync."

Component Video: Component video is a video signal that has been split into two or more component channels. In popular use, it refers to a type of component analog video information that is transmitted or stored as three separate signals. Like composite, component-video cables do not carry audio and are often paired with audio cables. When used without any other qualifications, the term component video usually refers to analog YPbPr component video with sync on luma.

Digital Signals

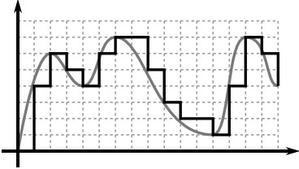

A digital signal refers to an electrical signal that is converted into a pattern of bits. Unlike an analog signal (which is a continuous signal that contains time-varying quantities), a digital signal has a discrete value at each sampling point. The precision of the signal is determined by how many samples are recorded per unit of time.

Digitizing is the representation of an analog signal by a discrete set of its points or samples. The result is called as digital form for the signal. To tell the long story short, digitizing means simply capturing an analog signal in digital form.

grey - analog signal, black - digital signal
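As a rough illustration of digitizing, the sketch below samples a continuous function at fixed intervals and rounds each sample to one of a limited number of levels. The function name `digitize`, the 1 Hz sine wave, the 8 samples per second and the 3-bit depth are all assumptions chosen for the example, not values from this document.

```python
import math


def digitize(signal, duration_s, sample_rate_hz, bit_depth):
    """Sample a continuous signal and quantize each sample.

    `signal` is any function of time (in seconds) returning a value
    in the range [-1.0, 1.0]. The result is a list of integer codes
    in the range 0 .. 2**bit_depth - 1.
    """
    levels = 2 ** bit_depth
    num_samples = int(duration_s * sample_rate_hz)
    codes = []
    for n in range(num_samples):
        t = n / sample_rate_hz              # sampling: discrete points in time
        value = signal(t)
        # quantization: map [-1, 1] onto the integer codes 0 .. levels - 1
        codes.append(round((value + 1.0) / 2.0 * (levels - 1)))
    return codes


def analog(t):
    """Hypothetical analog source: a 1 Hz sine wave."""
    return math.sin(2 * math.pi * t)


codes = digitize(analog, duration_s=1.0, sample_rate_hz=8, bit_depth=3)
print(codes)
```

Raising `sample_rate_hz` gives more points along the time axis, and raising `bit_depth` gives finer steps along the value axis. Both bring the digital form closer to the original curve.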

DVI: Digital Visual Interface was developed to create an industry standard for the transfer of digital video content. The interface is designed to transmit uncompressed digital video and can be configured to support multiple modes such as DVI-D (digital only), DVI-A (analog only), or DVI-I (digital and analog).

HDMI: High-Definition Multimedia Interface is the first industry-supported uncompressed, all-digital audio/video interface (single cable, single connector). HDMI provides an interface between any audio/video source, such as a set-top box, DVD player, or A/V receiver, and an audio and/or video monitor, such as a digital television. HDMI supports SD or HD video, plus multi-channel digital audio, on a single cable. It is able to transmit all HDTV standards and supports 8-channel digital audio, with bandwidth to spare to accommodate future requirements.

SDI: Serial Digital Interface (SDI) is a video interface typically used in professional applications. It uses standard coaxial video cables with professional BNC connectors to carry video that is encoded as a digital data stream. Since SDI originally lacked the capacity to carry a full HD video signal, the standard has been expanded to include higher bit rates as HD-SDI and finally as 3G-SDI (which is able to transfer data at up to 3 Gbit/s).

II. Video Standards

Standard Definition

Standard-definition television (SDTV) is a television system that uses a resolution that is lower than HDTV (720p and 1080p) or enhanced-definition television (EDTV, 480p). The two common SDTV signal types are 576i, derived from the European-developed PAL and SECAM systems, and 480i, based on the American NTSC system.

NTSC

NTSC (named for the National Television System Committee) is the analog TV standard used in most of North America and in parts of South America and the Pacific. It was developed in the 1940s, featuring 525 lines and 60 fields per second as the standard (480i, 29.97 fps). Please note that, as a result of the market moving to digital, the great majority of over-the-air NTSC transmissions were turned off in the USA in 2009 and in Canada and most others in 2011.

PAL

PAL (short for Phase Alternating Line) is the analog TV standard that was designed to overcome the problems of NTSC after the introduction of color TV. It was developed in the 1960s, featuring 625 lines and 50 fields per second (576i, 25 fps).

High Definition

High-Definition (HD) provides a resolution that is substantially higher than that of SD.

HDTV may be transmitted in various formats:

720p is a progressive HD signal format with 720 horizontal lines and an aspect ratio of 16:9. All major HDTV broadcasting standards include a 720p format which has a resolution of 1280×720.

1080i25 is an interlaced HD signal format with a spatial resolution of 1920 × 1080 and a temporal resolution of 50 interlaced fields per second. Since two fields make up one frame, this corresponds to 25 frames per second (25 fps).

1080i30 is an interlaced HD signal format with a spatial resolution of 1920 × 1080 and a temporal resolution of 60 interlaced fields per second. This corresponds to 30 frames per second (30 fps).

1080p is a progressive HD signal format with a spatial resolution of 1920 × 1080. It can refer to 1080p at 24 fps, 50 fps or 60 fps. The 1080p format is often marketed as full HD or native HD.

III. Video Compression

When digitized, an ordinary analog video sequence can consume as much as 165 Mbps (million bits per second), equivalent to over 20 MB (megabytes) of data per second. As may be expected, a series of techniques, called video compression techniques, has been developed to reduce this high bit rate. The ability to perform this task is quantified by the compression ratio: the higher the compression ratio, the lower the bandwidth consumption. The goal of compression is to reduce the data rate while keeping the image quality as high as possible.
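The arithmetic behind these figures can be sketched as follows. The frame size (720 × 576), frame rate (25 fps) and 16 bits per pixel are assumptions chosen because they reproduce a figure close to 165 Mbps. The 5 Mbps compressed rate is purely hypothetical.

```python
def uncompressed_bit_rate(width, height, fps, bits_per_pixel):
    """Bits per second needed to carry video with no compression."""
    return width * height * fps * bits_per_pixel


def compression_ratio(raw_bps, compressed_bps):
    """How many times smaller the compressed stream is than the raw one."""
    return raw_bps / compressed_bps


# Assumed SD picture: 720 x 576 pixels, 25 frames/s, 16 bits per pixel.
raw_bps = uncompressed_bit_rate(720, 576, 25, 16)
raw_mbps = raw_bps / 1_000_000                 # megabits per second
raw_mbytes_per_s = raw_bps / 8 / 1_000_000     # megabytes per second

# Hypothetical target: the same video compressed to 5 Mbps.
ratio = compression_ratio(raw_bps, 5_000_000)

print(f"{raw_mbps:.3f} Mbps = {raw_mbytes_per_s:.3f} MB/s, ratio {ratio:.1f}:1")
```

Under these assumptions the raw stream is 165.888 Mbps, or 20.736 MB per second, and compressing it to 5 Mbps corresponds to a ratio of roughly 33:1.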

Video compression has both advantages and disadvantages. Some of them are listed below.

Advantages:

- Occupies less disk space, results in substantially lower costs.

- Reading, writing and file transferring is faster.

- The order of bytes is independent.

Disadvantages:

- Requires computing resources; the more complex the algorithm, the more resources are required.

- Errors may occur while transmitting data.

- Loss in video quality, generation losses.

- Video needs to be decompressed to be usable.

What is a ‘codec’?

Codec is short for coder-decoder: the algorithm that takes a raw data file and turns it into a compressed file (or the inverse algorithm, in the case of decompression). Codecs may be found in hardware (such as in DV camcorders and capture cards) or in software. Because lossy compressed files contain only some of the data found in the original file, the codec is the necessary “translator” that decides which data makes it into the compressed version and which data gets discarded. In brief, a codec compresses and then decompresses content to achieve the size reduction needed to work with digital audio and video.

Video content that is compressed using one standard cannot be decompressed with a different standard, simply because one algorithm cannot correctly decode the output of another. It is possible, however, to implement many different codecs in the same software or hardware, which then allows multiple formats to be compressed and decompressed.
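The encode/decode round trip, and the failure when the wrong decoder is used, can be illustrated with general-purpose compressors from the Python standard library. Note that `zlib` and `bz2` are lossless data compressors used here only as stand-ins. They are not video codecs, and the sample data is made up.

```python
import bz2
import zlib

# Hypothetical "raw" content with a lot of redundancy.
raw = b"frame " * 1000

# Encode and decode with the same algorithm: the round trip succeeds.
encoded = zlib.compress(raw)
decoded = zlib.decompress(encoded)
ratio = len(raw) / len(encoded)


def decodes_with_bz2(data):
    """Return True if `data` can be decoded by the bz2 algorithm."""
    try:
        bz2.decompress(data)
        return True
    except (OSError, ValueError):
        # bz2 does not understand data produced by another algorithm.
        return False


print(decoded == raw, round(ratio, 1), decodes_with_bz2(encoded))
```

A program that bundles both modules can handle both formats, which mirrors how one player or device can ship with many codecs.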

DV is a codec that was launched in 1995 through the joint efforts of leading producers of video camcorders. DV uses lossy compression of video on a frame-by-frame (intraframe) basis, while the audio is stored uncompressed.

Sony and Panasonic have created their own proprietary versions of DV:

DVCPRO, also known as DVCPRO25, was developed by Panasonic for use in ENG equipment. An important difference from baseline DV is that the tape is transported 80% faster, resulting in a shorter recording time. DVCPRO50 was developed later and doubles the coded video data rate to 50 Mbit/s. This, of course, cuts the total recording time of any given storage medium in half compared to DVCPRO (25 Mbit/s).

DVCAM was developed by Sony for professional use. DVCAM can perform a frame-accurate insert tape edit, while baseline DV may vary by a few frames on each edit compared to the preview. Its tape transport is also 50% faster than baseline DV.

DVCPRO HD, also known as DVCPRO100, is an HD video codec that can be thought of as four DV codecs working in parallel. The video data rate depends on the frame rate and can be as low as 40 Mbit/s for 24 fps mode and as high as 100 Mbit/s for 50/60 fps modes.

MPEG-2

The MPEG-2 (1995) project mainly focused on extending the compression technique of MPEG-1 (1993) to cover larger pictures and higher quality at the expense of higher bandwidth usage. MPEG-2 also provides more advanced techniques to enhance the video quality at the same bit rate. The cost was the need for far more complex equipment. MPEG-2 is considered important because it has been chosen as the compression scheme for over-the-air digital TV (ATSC, DVB and ISDB), digital satellite TV services, digital cable TV signals, SVCD, DVD-Video and Blu-ray discs.

H.264

H.264 or AVC (Advanced Video Coding) is currently one of the most commonly used codecs for recording, compression and distribution of HD video. The first version of the standard was released in 2003.

The main goal of the initiative was to provide good video quality at substantially lower bit rates than previous standards (to be precise, an average bit rate reduction of 50% at a fixed video quality), or better video quality at an unchanged bit rate, together with better error robustness. The standard is further designed to offer low-latency operation, as well as better quality where higher latency is acceptable.

An additional goal was to provide enough flexibility to allow the standard to be applied to a wide variety of applications: for both low and high bit rates, for low and high resolution video, and with high and low latency demands. Entertainment video including broadcast, satellite, cable and DVD (1-10 Mbps, high latency); telecom services (<1Mbps, low latency); streaming services (low bit-rate, high latency) are included in this variety.

ProRes 422

ProRes 422 is a lossy video compression codec developed by Apple for use in post production, and was first introduced in 2007 with Final Cut Studio 2. ProRes is a line of intermediate codecs, which means they are intended for use during video editing and are not practical for end-user viewing. The benefit of an intermediate codec is that it retains higher quality than end-user codecs while still requiring much less expensive disk systems than uncompressed video.

DNxHD

DNxHD is a lossy HD video compression codec developed by Avid for both editing and presentation, and was first introduced in 2004 with Avid DS Nitris. DNxHD offers a choice of three user-selectable bit rates: 220 Mbit/s with a bit depth of 10 or 8 bits, and 145 or 36 Mbit/s with a bit depth of 8 bits. DNxHD data is typically stored in an MXF container, although it can also be stored in a QuickTime container.

VC-1

VC-1, which was initially developed as a proprietary video format by Microsoft, was released as a SMPTE video codec standard in 2006. It is today a supported standard found in Blu-ray discs, Windows Media and the now-discontinued HD DVD. It is characterized as an alternative to the video codec H.264. The VC-1 codec specification has so far been implemented by Microsoft in the form of 3 codecs, WMV3, WMVA and WVC1.

NOTE: Because VC-1 encoding and decoding require significant computing power, software implementations that run on a general-purpose CPU are typically slow, especially when dealing with HD video content. To reduce CPU usage or to do real-time encoding, special-purpose hardware may be employed, either for the complete encoding or decoding process, or for acceleration assistance within a CPU-controlled environment. In terms of computation, more or less the same situation applies to H.264.

IV. File Wrappers

A container or wrapper is a metafile format whose specification describes how different data elements and metadata coexist in a digital file. Most wrappers can support multiple audio and video streams, subtitles, chapter information, and metadata (tags), along with the synchronization information needed to play back the various streams together.

First-time users often stumble when trying to figure out the difference between codecs and containers (wrappers). A codec is a method for encoding or decoding data. Once the media data is compressed into suitable formats and reasonable sizes, it needs to be packaged, transported, and presented. That's the purpose of containers--to be discrete "black boxes" for holding a variety of media formats. Most wrappers can handle files compressed with a variety of different codecs.
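One way to picture the distinction is as a small data structure: the wrapper is the outer box, and each track inside it names the codec used for that track. The class names, fields and sample values below are hypothetical and only illustrate the idea. They do not describe any real file format's layout.

```python
from dataclasses import dataclass, field


@dataclass
class Track:
    kind: str              # "video", "audio" or "subtitle"
    codec: str             # how this track's data is encoded
    language: str = "und"  # undetermined unless stated


@dataclass
class Container:
    wrapper: str                                  # the file format, e.g. "MXF"
    tracks: list = field(default_factory=list)
    metadata: dict = field(default_factory=dict)

    def codecs(self, kind):
        """List the codecs used by all tracks of the given kind."""
        return [t.codec for t in self.tracks if t.kind == kind]


# One wrapper holding a video track and two audio tracks.
clip = Container(
    wrapper="MXF",
    tracks=[
        Track("video", "DNxHD"),
        Track("audio", "PCM", "eng"),
        Track("audio", "PCM", "tur"),
    ],
    metadata={"title": "example clip"},
)

# Re-wrapping changes the box, not the encoded streams inside it.
rewrapped = Container("MOV", list(clip.tracks), dict(clip.metadata))
print(clip.codecs("video"), rewrapped.wrapper)
```

In this picture, changing the codec means re-encoding the media, while changing the wrapper only repackages the same encoded streams.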

MOV

MOV (QuickTime) was developed by Apple Inc. and first released in 1991. It is capable of handling various formats of digital video, picture, sound, panoramic images and interactivity.

A MOV wrapper contains one or more tracks, each of which stores a particular type of data: audio, video, effects, or text (e.g. for subtitles). Each track either contains a digitally encoded media stream (using a specific format) or a data reference to the media stream located in another file. The ability to contain abstract data references for the media data, and the separation of the media data from the media offsets and the track edit lists, mean that QuickTime is particularly suited for editing, as it is capable of importing and editing in place (without data transfer).

Other file formats that QuickTime supports natively (to varying degrees) include AIFF, WAV, DV-DIF, MP3 and MPEG program stream. With additional QuickTime Components, it can also support ASF, DivX, Flash Video, Matroska, Ogg, and many others.

MPG (MPEG Program Stream)

Program stream (PS or MPEG-PS) is a wrapper format for multiplexing digital audio, video and more. The PS format is specified in MPEG-1 Part 1 and MPEG-2 Part 1. The MPEG-2 program stream is analogous to the MPEG-1 systems layer and is forward compatible with it.

Program streams are used on DVD-Video discs and HD-DVD video discs, but with some restrictions and extensions. The file name extensions are VOB and EVO respectively.

An MPEG-2 program stream can contain MPEG-1 Part 2 video, MPEG-2 Part 2 video, MPEG-1 Part 3 audio (MP3, MP2, MP1) or MPEG-2 Part 3 audio. It can also contain MPEG-4 Part 2 video, MPEG-2 Part 7 audio (AAC) or MPEG-4 Part 3 (AAC) audio, although these are rarely used. The MPEG-2 program stream has provisions for non-standard data (e.g. AC-3 audio or subtitles) in the form of so-called private streams.

M2V (MPEG Elementary Stream)

An elementary stream (ES), as defined by the MPEG communication protocol, is usually the output of an audio or video encoder. An ES contains only one kind of data, e.g. audio, video or closed captions. It can be referred to as an "elementary", "data", "audio", or "video" stream. The format of the elementary stream depends on the codec or data carried in the stream, but it will often carry a common header when packetized into a packetized elementary stream (PES). The file name extension for an MPEG-2 video elementary stream is M2V.

MPEG TS (MPEG Transport Stream)

MPEG transport stream (MPEG-TS, MTS or TS) is a format for transmission and storage of audio, video and PSIP (program and system information protocol) data. It is used in broadcast systems such as DVB, ATSC and IPTV.

Transport stream specifies a wrapper format encapsulating packetized elementary streams, with error correction and stream synchronization features for maintaining transmission integrity when the signal is degraded. Transport streams differ from program streams (PS) in several important ways: program streams are designed for reasonably reliable media, such as discs (like DVDs), while transport streams are designed for less reliable transmission, namely terrestrial or satellite broadcast. Also, a transport stream may carry multiple programs.
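To make the packet structure more concrete, the sketch below reads a few fields from the 4-byte header of a single transport stream packet. The layout used here (188-byte packets, sync byte 0x47, a 13-bit packet identifier and a 4-bit continuity counter) comes from the MPEG-2 systems specification rather than from this document. The function name and the sample bytes are made up for illustration.

```python
TS_PACKET_SIZE = 188   # fixed packet length in bytes
SYNC_BYTE = 0x47       # every packet starts with this value


def parse_ts_header(packet):
    """Extract a few header fields from one transport stream packet."""
    if len(packet) != TS_PACKET_SIZE:
        raise ValueError("a transport stream packet is 188 bytes long")
    if packet[0] != SYNC_BYTE:
        raise ValueError("lost synchronization: first byte is not 0x47")
    return {
        # set when the packet is known to contain uncorrectable errors
        "transport_error": bool(packet[1] & 0x80),
        # set when a new PES packet or table section starts in this packet
        "payload_unit_start": bool(packet[1] & 0x40),
        # 13-bit packet identifier: which stream this packet belongs to
        "pid": ((packet[1] & 0x1F) << 8) | packet[2],
        # 4-bit counter that increments per PID, used to detect lost packets
        "continuity_counter": packet[3] & 0x0F,
    }


# Made-up packet: valid header followed by 184 zero bytes of payload.
packet = bytes([0x47, 0x41, 0x00, 0x17]) + bytes(184)
header = parse_ts_header(packet)
print(header)
```

The fixed packet size and sync byte let a receiver find packet boundaries again after a burst of errors. The packet identifier is what allows several programs to share one stream.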

MXF

MXF is a wrapper format which supports a number of different streams of coded essence, encoded with any of a variety of codecs, together with a metadata wrapper which describes the material contained within the MXF file.

The Material Exchange Format (MXF), first developed in 2000, is the result of collaboration between manufacturers and major organizations such as Pro-MPEG, the EBU (European Broadcasting Union) and the AAF (Advanced Authoring Format) Association. The main goal was to achieve interoperability of content between the various applications used in the television production chain, leading to operational efficiency and creative freedom through a unified networked environment.

MXF bundles video, audio, and program data together (together termed as essence) along with metadata and places them into a wrapper. Its body is stream based and carries the essence and some of the metadata. It holds a sequence of video frames, each complete with associated audio and data essence, plus frame-based metadata. The latter typically comprises timecode and file format information for each of the video frames.

MP4

MPEG-4 Part 14 or MP4 is a multimedia container format standard specified as a part of MPEG-4. It is most commonly used to store digital video and audio streams, especially those defined by MPEG, but can also be used to store other data such as subtitles and still images. Like most modern container formats, MPEG-4 Part 14 allows streaming over the Internet. A separate hint track is used to include streaming information in the file.

MPEG-4 files with audio and video generally use the standard .mp4 extension. M4A, on the other hand, stands for MPEG 4 Audio and is a file name extension used to represent audio files.

WMV

Windows Media Video (WMV) is a video compression format for several proprietary codecs developed by Microsoft. The original video format, known as WMV, was designed for Internet streaming applications. WMV 9 has gained adoption for physical-delivery formats such as HD-DVD and Blu-ray discs.

A WMV file is in most circumstances encapsulated in the Advanced Systems Format (ASF) container format. The file extension .WMV typically describes ASF files that use Windows Media Video codecs. The audio codec used in conjunction with Windows Media Video is typically some version of Windows Media Audio. Although WMV is generally packed into the ASF container format, it can also be put into the Matroska or AVI container format. The resulting files have the .MKV and .AVI file extensions, respectively.

V. Streaming Protocols

Media streaming is the act of transmitting compressed audiovisual content across a private or public network (like the Internet, a LAN, satellite, or cable television) to be processed and played back on a client player (such as a TV, smart phone or computer) while enabling real time or on-demand audio, video and multimedia content.

UDP

User Datagram Protocol (UDP) (designed in 1980) is one of the core members of the Internet protocol suite, the set of network protocols used for the Internet. With UDP, computer applications can send messages, in this case referred to as datagrams, to other hosts on an IP network without prior communications to set up special transmission channels or data paths.

UDP has no handshaking dialogues, and thus exposes any unreliability of the underlying network protocol to the user's program. As this is normally IP over unreliable media, there is no guarantee of delivery, ordering or duplicate protection. It is suitable for purposes where error checking and correction is either not necessary or performed in the application, avoiding the overhead of such processing at the network interface level.
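A minimal sketch of sending one datagram with Python's standard `socket` module is shown below. Both ends run on the local machine purely for demonstration. The function name and payload are assumptions, and the loopback interface hides the packet loss that a real network could introduce.

```python
import socket


def udp_round_trip(payload, timeout_s=2.0):
    """Send one datagram to a local receiver and return what arrived."""
    receiver = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    sender = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    try:
        # Port 0 asks the operating system for any free port.
        receiver.bind(("127.0.0.1", 0))
        receiver.settimeout(timeout_s)   # UDP gives no delivery guarantee
        # No connection setup: the datagram is simply addressed and sent.
        sender.sendto(payload, receiver.getsockname())
        data, _sender_address = receiver.recvfrom(65535)
        return data
    finally:
        sender.close()
        receiver.close()


print(udp_round_trip(b"frame-0001"))
```

There is no handshake before `sendto` and no acknowledgement after it. If the datagram were lost, the only symptom would be the receiver's timeout.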

TCP

Transmission Control Protocol (TCP) is one of the two original components of the Internet protocol suite, complementing the Internet Protocol (IP), and therefore the entire suite is commonly referred to as TCP/IP. TCP provides reliable, ordered delivery of a stream of octets from a program on one computer to another program on another computer. TCP is the protocol used by major Internet applications such as the World Wide Web, email, remote administration and file transfer. Other applications, which do not require a reliable data stream service, may use UDP, which provides a datagram service that emphasizes reduced latency over reliability.
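For comparison with a datagram service, the following sketch opens a TCP connection on the local machine, sends several chunks and reads them back as one ordered byte stream. The function name is an assumption, and both ends live in one process only to keep the example self-contained.

```python
import socket


def tcp_round_trip(chunks, timeout_s=2.0):
    """Send chunks over a local TCP connection and return the bytes read."""
    listener = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    try:
        listener.bind(("127.0.0.1", 0))   # port 0: any free port
        listener.listen(1)
        listener.settimeout(timeout_s)
        # Connecting performs the handshake that sets up the stream.
        client = socket.create_connection(listener.getsockname(),
                                          timeout=timeout_s)
        server_side, _client_address = listener.accept()
        server_side.settimeout(timeout_s)
        try:
            for chunk in chunks:
                client.sendall(chunk)
            client.shutdown(socket.SHUT_WR)   # signal "no more data"
            received = bytearray()
            while True:
                data = server_side.recv(4096)
                if not data:                  # peer finished sending
                    break
                received.extend(data)
            return bytes(received)
        finally:
            client.close()
            server_side.close()
    finally:
        listener.close()


print(tcp_round_trip([b"part-1 ", b"part-2 ", b"part-3"]))
```

The receiver sees one continuous stream in the order it was sent. The boundaries between the original chunks are not preserved, which is the "stream of octets" behaviour described above.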

RTP

The Real-time Transport Protocol (RTP) defines a standardized packet format for delivering audio and video over IP networks. RTP is used extensively in communication and entertainment systems that involve streaming media, such as telephony, video teleconference applications, television services and web-based push-to-talk features.

RTP is designed for end-to-end, real-time transfer of stream data. The protocol provides facilities for jitter compensation and for detecting out-of-sequence arrival of data, both of which are common during transmission over an IP network. RTP supports data transfer to multiple destinations through IP multicast.
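The sketch below shows how a receiver might read the fixed 12-byte RTP header and use its sequence numbers to spot packets that arrived late. The header layout follows the RTP specification rather than this document. The function names and sample values are assumptions, and the out-of-order check ignores wrap-around of the 16-bit sequence number to keep the example short.

```python
import struct


def parse_rtp_header(packet):
    """Read the fixed 12-byte header at the start of an RTP packet."""
    if len(packet) < 12:
        raise ValueError("the fixed RTP header is 12 bytes long")
    first, second, sequence, timestamp, ssrc = struct.unpack(
        "!BBHII", packet[:12])
    return {
        "version": first >> 6,            # top two bits of the first byte
        "marker": bool(second & 0x80),
        "payload_type": second & 0x7F,    # identifies the media encoding
        "sequence": sequence,             # increments by one per packet
        "timestamp": timestamp,           # sampling instant, for jitter handling
        "ssrc": ssrc,                     # identifies the sending source
    }


def out_of_order(sequences):
    """Return sequence numbers that arrived after a higher-numbered one."""
    late = []
    highest = None
    for seq in sequences:
        if highest is not None and seq < highest:
            late.append(seq)
        else:
            highest = seq
    return late


# Made-up packet: version 2, payload type 96, sequence 1000.
sample = struct.pack("!BBHII", 0x80, 96, 1000, 90000, 0x1234) + b"payload"
print(parse_rtp_header(sample))
print(out_of_order([1, 2, 4, 3, 5]))
```

A player would typically hold packets in a short buffer, reorder them by sequence number and schedule playback from the timestamps.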

RTMP

Real Time Messaging Protocol (RTMP) was initially a proprietary protocol developed by Macromedia for streaming audio, video and data over the Internet between a Flash player and a server. Macromedia is now owned by Adobe, which has released an incomplete version of the specification of the protocol for public use. While the primary motivation for RTMP was to be a protocol for playing Flash video, it is also used in a number of other applications.

RTMPS

RTMPS is RTMP over a Transport Layer Security (TLS/SSL) connection.

RTSP

The Real Time Streaming Protocol (RTSP) is a network control protocol designed for use in entertainment and communications systems to control streaming media servers. The protocol is used for establishing and controlling media sessions between endpoints. Clients of media servers issue VHS-style commands, such as play, record and pause, to facilitate real-time control of the media streaming from the server to a client (Video On Demand) or from a client to the server (Voice Recording).
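RTSP requests are plain text and look much like HTTP requests. The sketch below only builds the text of a request and does not contact a server. The helper name, the URL and the session identifier are hypothetical.

```python
def build_rtsp_request(method, url, cseq, session=None):
    """Build the text of a minimal RTSP 1.0 request.

    `cseq` is the request counter that lets the client match responses
    to requests; `session` is the identifier the server handed out when
    the session was set up (omitted for requests made before that).
    """
    lines = [f"{method} {url} RTSP/1.0", f"CSeq: {cseq}"]
    if session is not None:
        lines.append(f"Session: {session}")
    # Header lines end with CRLF; an empty line ends the request.
    return "\r\n".join(lines) + "\r\n\r\n"


# Hypothetical stream address and session identifier.
url = "rtsp://example.com/stream"
play = build_rtsp_request("PLAY", url, cseq=4, session="12345678")
pause = build_rtsp_request("PAUSE", url, cseq=5, session="12345678")
print(play)
```

The commands only control the session. The media itself is normally delivered separately, for example over RTP.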

WebRTC

WebRTC (Web Real-Time Communication) is a free, open-source project providing web browsers and mobile applications with real-time communication (RTC) via simple application programming interfaces (APIs). It allows audio and video communication to work inside web pages by allowing direct peer-to-peer communication, eliminating the need to install plugins or download native apps.

Microsoft Smooth Streaming

Internet Information Services (IIS) is a web server software application and set of feature extension modules created by Microsoft for use with Microsoft Windows. Smooth Streaming is an IIS Media Services extension that enables adaptive streaming of media to clients over HTTP.

Adaptive bit rate streaming is a technique used in streaming multimedia over computer networks. While in the past most video streaming technologies used streaming protocols such as RTP with RTSP, today's adaptive streaming technologies are almost exclusively based on HTTP and designed to work efficiently over large distributed HTTP networks such as the Internet.

It works by detecting the user's bandwidth and CPU capacity in real time and adjusting the quality of the video stream accordingly. It requires an encoder that can encode a single source video at multiple bit rates. The player client switches between the different encodings depending on available resources. The advertised results are little buffering, fast start times and a good experience on both high-end and low-end connections.
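The switching decision can be sketched as a small function that picks the highest encoding the connection can sustain. This is a simplified illustration and not the algorithm of any particular player: it considers bandwidth only (not CPU capacity), and the function name, the 0.8 safety factor and the list of bit rates are assumptions.

```python
def choose_rendition(bitrates_bps, measured_bandwidth_bps, safety_factor=0.8):
    """Pick the highest bit rate that fits within the measured bandwidth.

    Only a fraction of the measured bandwidth (`safety_factor`) is
    budgeted, to leave headroom for fluctuations. If nothing fits,
    fall back to the lowest available bit rate.
    """
    budget = measured_bandwidth_bps * safety_factor
    affordable = [b for b in sorted(bitrates_bps) if b <= budget]
    return affordable[-1] if affordable else min(bitrates_bps)


# Hypothetical set of encodings of the same source video.
ladder = [400_000, 1_200_000, 2_500_000, 5_000_000]

for bandwidth in (300_000, 4_000_000, 10_000_000):
    print(bandwidth, "->", choose_rendition(ladder, bandwidth))
```

A player would re-run a decision like this as each chunk is downloaded, so the quality follows the connection up and down during playback.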

IIS Media Services 4.0, released in November 2010, introduced a feature which enables Smooth Streaming H.264/AAC videos, both live and on-demand, to be dynamically repackaged into the Apple HTTP adaptive streaming format (HLS, or HTTP Live Streaming) and delivered to iOS devices without the need for re-encoding.

HLS (HTTP Live Streaming)

HTTP Live Streaming (also known as HLS) is an HTTP based media streaming communications protocol implemented by Apple Inc. as part of their QuickTime and iOS software. It works by breaking the overall stream into a sequence of small HTTP-based file downloads, each download loading one short chunk of an overall potentially unbounded transport stream. As the stream is played, the client may select from a number of different alternate streams containing the same material encoded at a variety of data rates, allowing the streaming session to adapt to the available data rate.

Since its requests use only standard HTTP transactions, HTTP Live Streaming is capable of traversing any firewall or proxy server that lets through standard HTTP traffic, unlike UDP-based protocols such as RTP.
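The list of alternate streams is published as a plain-text playlist that the client downloads first. The sketch below parses a small, made-up master playlist and extracts each variant's advertised bandwidth and address. The playlist content and the function name are assumptions, and only the `BANDWIDTH` attribute is read.

```python
import re

# Made-up master playlist listing three encodings of the same material.
MASTER_PLAYLIST = """#EXTM3U
#EXT-X-STREAM-INF:BANDWIDTH=800000,RESOLUTION=640x360
low/index.m3u8
#EXT-X-STREAM-INF:BANDWIDTH=2500000,RESOLUTION=1280x720
mid/index.m3u8
#EXT-X-STREAM-INF:BANDWIDTH=5000000,RESOLUTION=1920x1080
high/index.m3u8
"""


def parse_variants(playlist_text):
    """Return (bandwidth, uri) pairs for each variant stream listed."""
    variants = []
    pending_bandwidth = None
    for line in playlist_text.splitlines():
        line = line.strip()
        if line.startswith("#EXT-X-STREAM-INF:"):
            # Match BANDWIDTH only, not AVERAGE-BANDWIDTH.
            match = re.search(r"[:,]BANDWIDTH=(\d+)", line)
            pending_bandwidth = int(match.group(1)) if match else None
        elif line and not line.startswith("#") and pending_bandwidth is not None:
            # The line after the tag is the address of that variant.
            variants.append((pending_bandwidth, line))
            pending_bandwidth = None
    return variants


for bandwidth, uri in parse_variants(MASTER_PLAYLIST):
    print(bandwidth, uri)
```

Each address points to a further playlist that lists the short media chunks for that data rate. The client fetches both with ordinary HTTP requests.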

VI. Various other Concepts

Closed Caption

Closed captioning is embedded in the television signal and becomes visible when you use a special decoder, either as a separate appliance or built into a television set. The decoder lets viewers see captions, usually at the bottom of the screen, that will tell them what is being said or heard on their favorite TV shows. In general, an onscreen menu on TV sets allows you to turn closed captioning on or off. Open captions, in contrast, are an integral part of a transmission that cannot be turned off by the viewer.

DVB Subtitle

DVB stands for Digital Video Broadcasting, and this technology relies on the viewer having a suitable digital receiver either as a standalone Set Top Box (STB) or built into their TV set. The transmission method may either be digital terrestrial (DTT), satellite, or cable distribution. As its name implies, DVB uses all digital signals throughout the transmission path. Subtitles are generated using a similar technique to open subtitling and then formatted as graphics (bit-maps). This data is then transmitted as part of the station’s output transport stream to the set-top box decoders. Once a decoder receives the data the subtitle is then re-constructed in the decoder’s memory. A user can select whether to display subtitles or not and, if available, which one of several languages. At the appropriate time the decoder will then display the subtitle on-screen.

RS 422

RS-422 is a telecommunications standard for binary serial communications between devices. It is the specification that must be followed to allow two devices that implement this standard to speak to each other. RS-422 can be seen as a successor to the original serial standard known as RS-232: it uses differential signaling, which allows longer cable runs and higher data rates. In RS-232 terminology, one device is known as the data terminal equipment (DTE) and the other as the data communications equipment (DCE). For instance, in a typical serial link between a computer and a printer, the computer is the DTE device and the printer is the DCE device.

VITC

VITC is a form of SMPTE timecode embedded as a pair of black-and-white bars in a video signal. These lines are typically inserted into the vertical blanking interval of the video signal. There can be more than one VITC pair in a single frame of video: this can be used to encode extra data that will not fit in a standard timecode frame.

VITC contains the same payload as an SMPTE linear timecode frame, embedded in a new frame structure with extra synchronization bits and an error-detection checksum. The VITC code is always repeated on two adjacent video lines, one in each field. This internal redundancy is exploited by VITC readers, in addition to the standard timecode "flywheel" algorithm.
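The timecode payload identifies each frame as hours, minutes, seconds and frames. The sketch below converts between that notation and a running frame count. It assumes an integer frame rate such as 25 fps and non-drop-frame counting, and the function names are made up for illustration.

```python
def timecode_to_frames(timecode, fps):
    """Convert "HH:MM:SS:FF" to a frame count (non-drop-frame)."""
    hours, minutes, seconds, frames = (int(p) for p in timecode.split(":"))
    if frames >= fps:
        raise ValueError("frame number must be below the frame rate")
    return ((hours * 60 + minutes) * 60 + seconds) * fps + frames


def frames_to_timecode(total_frames, fps):
    """Convert a frame count back to "HH:MM:SS:FF" (non-drop-frame)."""
    frames = total_frames % fps
    total_seconds = total_frames // fps
    hours = total_seconds // 3600
    minutes = total_seconds % 3600 // 60
    seconds = total_seconds % 60
    return f"{hours:02d}:{minutes:02d}:{seconds:02d}:{frames:02d}"


count = timecode_to_frames("01:00:01:12", fps=25)
print(count, frames_to_timecode(count, fps=25))
```

Working in frame counts makes it straightforward to compute durations or offsets between two timecodes before converting back for display.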

GPI

At the time of its development, the General Purpose Interface Bus (GPIB, standardized as IEEE-488) was particularly well-suited for instrument applications when compared with the alternatives. In essence, IEEE-488 comprises a “bus on a cable,” providing both a parallel data transfer path on eight lines and eight dedicated control lines. Given the demands of the times, its nominal 1 Mbyte/sec maximum data transfer rate seemed quite adequate; even today, IEEE-488 is sufficiently powerful for many highly sophisticated and demanding applications.

Today, IEEE-488 is the most widely recognized and used method for communication among scientific and engineering instruments. Major stand-alone general purpose instrument vendors include IEEE-488 interfaces in their products. Many vertical market instrument makers also rely on IEEE-488 for data communications and control.